2Q 2021 | IN-6124

Vision as the Future of Perception for Mobile Robotics |

NEWS |

ABI Research’s recent competitive assessment of autonomous material handling robots found that the most successful Autonomous Mobile Robot (AMR) vendors currently tend to pair SICK AG LiDAR sensors with a 3D camera. Sensor fusion is thus an increasingly common reality for navigation and perception in the mobile robotics space. That said, a small number of companies offer mapping, positioning, and perception (in effect, full Simultaneous Localization and Mapping, or SLAM, capability) purely through the use of cameras. These include Seegrid, 634 AI, Sevensense, and Gideon Brothers, and others are entering the market.

The Vision vs LiDAR Debate |

IMPACT |

The debate over visual SLAM (vSLAM) versus LiDAR-enabled SLAM as a way to help robots navigate has been waged since iRobot challenged Neato’s LiDAR robots with its own ceiling-based visual navigation. Just as in the consumer space, LiDAR continues to play a critical role in localization, mapping, and navigation for autonomous mobile robots. The reasons are as follows:

- LiDAR is more accurate in a flat 2D plane, and its greater sensitivity means maps are created faster

- LiDAR does not require good lighting to navigate

- LiDAR provides added redundancy to vision-based solutions

- The SICK AG sensors are certified to a higher degree than vision-only solutions

Ultimately, 2D LiDAR is a staple of modern AMRs, and given the safety certification credentials of the products offered by German LiDAR manufacturer SICK AG, these sensors are considered essential for most AMRs. LiDAR has its own weaknesses, however, including:

- LiDAR collects much less data than cameras do

- 2D LiDAR struggles on uneven surfaces

- LiDAR often needs reflectors in the form of external infrastructure

- More capable 3D LiDAR systems are incredibly expensive

By contrast, vision-centered solutions have some clear advantages:

- Vision is future-proof. Because they capture more data, vision-based systems provide a richer data set for training increasingly capable AI-based algorithms

- Vision can potentially, given the right algorithms, be used as a platform for AI-enabled semantic applications that allow the robot to understand its environment at a much deeper level. This entails techniques like panoptic segmentation, meaning a robot can distinguish between workstations, the floor, ceilings, walls, other vehicles, and humans

- As end users come to expect more from AMRs, this new layer of detail will begin to carry added business value
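To make the semantic layer concrete, the following is a minimal sketch of how a robot might act on panoptic segmentation output. It assumes the perception stack has already produced a grid in which every cell carries a class label and an instance ID. The class names, the speed caps, and the `summarize` and `speed_limit` helpers are all hypothetical and chosen for illustration; they are not taken from any vendor's product.

```python
from collections import Counter

# Hypothetical class labels; a real segmentation model defines its own set.
FLOOR, WALL, WORKSTATION, VEHICLE, HUMAN = (
    "floor", "wall", "workstation", "vehicle", "human"
)

# Hypothetical speed caps in m/s, applied when a class is visible ahead.
SPEED_CAP = {HUMAN: 0.3, VEHICLE: 0.8, WORKSTATION: 1.0}
DEFAULT_SPEED = 1.5  # assumed cruising speed when nothing relevant is seen


def summarize(panoptic):
    """Count distinct instances per class in a grid of (class, instance_id) cells."""
    instances = {(cls, inst) for row in panoptic for cls, inst in row}
    return Counter(cls for cls, _ in instances)


def speed_limit(panoptic):
    """Return the most restrictive speed cap among the classes in view."""
    counts = summarize(panoptic)
    caps = [SPEED_CAP[cls] for cls in counts if cls in SPEED_CAP]
    return min(caps, default=DEFAULT_SPEED)


# Toy 4x4 frame: two people, one other vehicle, a wall, and open floor.
frame = [
    [(WALL, 0), (WALL, 0), (WALL, 0), (WALL, 0)],
    [(HUMAN, 1), (FLOOR, 0), (HUMAN, 2), (VEHICLE, 1)],
    [(HUMAN, 1), (FLOOR, 0), (HUMAN, 2), (VEHICLE, 1)],
    [(FLOOR, 0), (FLOOR, 0), (FLOOR, 0), (FLOOR, 0)],
]

print(summarize(frame)[HUMAN])  # 2 distinct people in view
print(speed_limit(frame))       # 0.3, because a human is present
```

The point of the sketch is that instance-level labels let the robot reason about "two people and one vehicle" rather than about undifferentiated obstacles, which a 2D LiDAR scan alone cannot provide.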

But vision itself comes with challenges:

- Lack of technical safety assurance

- Relative lack of accuracy compared to camera / LiDAR combinations. Most vendors adopt a sensor fusion configuration that uses both vision and LiDAR for safety. This improves safety but also increases cost

- Sensitivity to lighting and other environmental conditions

Currently, in the consumer space, companies have decided to combine LiDAR and cameras. Ecovacs, a Chinese supplier and the second-largest seller of home robots globally, uses LiDAR for main navigation and a front-facing camera for visual interpretation. This additional vision capability means the Ecovacs system can avoid socks and cords that are too light for bump sensors to detect. Many commercial AMR vendors, like Fetch Robotics, are attempting something similar with sensor fusion, albeit at a much higher price.
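One way to see why combining the two sensors adds both accuracy and redundancy is a minimal sketch of inverse-variance weighting, a standard way to merge two independent estimates of the same quantity. This is an illustrative technique, not a description of how Ecovacs, Fetch Robotics, or any other vendor fuses its sensors. The `fuse` function and the noise figures in the example are assumptions made up for the illustration.

```python
def fuse(lidar, camera):
    """Fuse two independent range estimates by inverse-variance weighting.

    Each argument is a (range_m, std_m) tuple, or None when that sensor
    has no valid reading. Returns (fused_range_m, fused_std_m), or None
    if neither sensor reported.
    """
    readings = [r for r in (lidar, camera) if r is not None]
    if not readings:
        return None
    # A more precise sensor (smaller std) gets a larger weight.
    weights = [1.0 / (std ** 2) for _, std in readings]
    total = sum(weights)
    fused = sum(w * rng for w, (rng, _) in zip(weights, readings)) / total
    return fused, (1.0 / total) ** 0.5


# Illustrative numbers only: LiDAR assumed precise, camera depth assumed noisier.
both = fuse((2.00, 0.02), (2.10, 0.10))
camera_only = fuse(None, (2.10, 0.10))  # LiDAR dropout: fall back to the camera

print(both)
print(camera_only)
```

With both sensors available, the fused estimate sits close to the more precise reading and has a smaller uncertainty than either input. When one sensor drops out, the function still returns the remaining estimate, which is the redundancy argument in miniature.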

But what about companies interested solely in vision? This segment is heating up, with a number of vendors beginning to enter it.

Going Forward for Vision-Centric AMRs |

RECOMMENDATIONS |

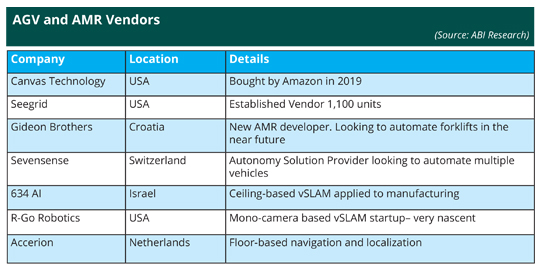

The list of vision-centric Automated Guided Vehicle (AGV) and AMR vendors is shown in the accompanying figure. This market is centered primarily on the United States and Europe. Canvas Technology is one of the most important of these vendors, as it was acquired by Amazon in 2019. While its footprint was minimal as an independent company, its technology will inevitably feature in Amazon Robotics’ next generation of material handling robots.

Seegrid is a more established vendor that utilizes vision-based perception to navigate fixed pre-recorded routes. They recently acquired a 3D LiDAR startup called Box Robotics. This suggests that they will be using sensor fusion going forward for their next range of products.

Gideon Brothers is a smaller vendor in Croatia that is developing LiDAR-free SLAM-based navigation. It is in talks with various forklift manufacturers to develop autonomous material handling systems. A company with a similar plan is Switzerland-based Sevensense, which has leased out its technology stack to automate quadrupeds from ANYbotics.

634 AI is a new startup that has developed autonomous forklift solutions based on ceiling-based vSLAM. This approach takes techniques from the consumer market and scales them up to industry, and a ceiling-centric navigation solution makes it possible to drastically reduce the onboard sensor hardware on individual robots. 634 AI’s aim is therefore to use vision in a new infrastructure-based way to enable cost savings. In a similar fashion, Accerion develops camera-based solutions for AMRs that are placed on the robot’s base and scan the floor, providing millimeter-level positioning. This is currently used to improve localization accuracy, but the company’s aim is to use the same technique for all navigation purposes.

Least is known about RGO Robotics, an American-Israeli startup that hopes to be a comprehensive autonomy solution provider (ASP) developing high-end vision-based perception for the entire robotics market.

ABI Research recently conducted a competitive assessment of the major autonomous material handling robot vendors (CA-1271). While vision-centric vendors like Gideon Brothers and Seegrid rank highly in terms of innovation, they have not yet deployed their products to the same degree as vendors focused on sensor fusion or on more rudimentary techniques that rely on external infrastructure, such as bar codes and magnets.

As this new round of vision-centered AMR companies develops, onlookers should consider what new capabilities a platform built on this additional data makes possible.