Autonomous Driving Sensors: Essential Improvements for Self-Driving Vehicle Adoption

The race toward fully autonomous vehicles depends on smarter, more capable sensors. As camera technologies evolve, automakers are pushing for higher resolution, greater dynamic range, and more integrated monitoring systems to make self-driving cars safer and more reliable. This article explores the essential sensor improvements needed for widespread adoption of autonomous driving.

Key Takeaways:

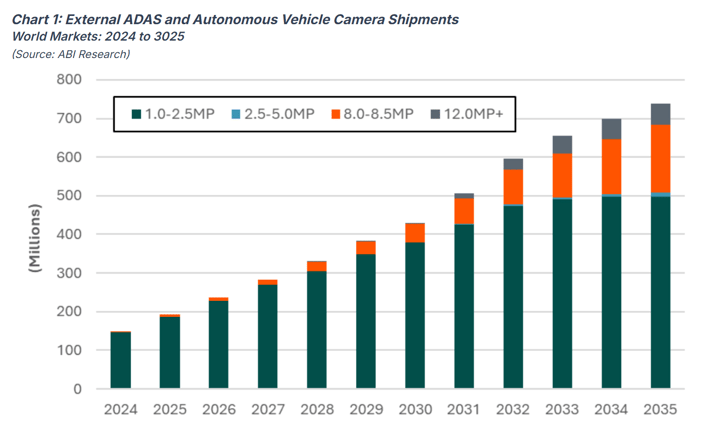

- Autonomous vehicle camera demand is accelerating. External sensor shipments will increase from 193 million in 2025 to nearly 738 million in 2035, driven by the shift from basic safety systems to advanced driver assistance and piloted automation.

- Higher-resolution imaging is essential for safety. Automakers are moving from 1.7 Megapixel (MP) sensors to 8 MP and beyond to deliver sharper, more accurate object detection for autonomous driving functions.

- Improved dynamic range enables better visibility. Future sensors must reach 150–160 Decibels (dB) to handle extreme lighting contrasts; for instance, driving from bright sunlight into dark tunnels without losing accuracy.

- Combining driver and occupant monitoring is a long-term goal. While most vehicles still use separate sensors, advances in camera design may soon allow a single system to monitor both driver behavior and cabin occupancy.

Autonomous driving camera sensors are set to grow at a Compound Annual Growth Rate (CAGR) of 15% between 2025 and 2035, with the pivot from active safety to piloted assistance systems stimulating the market from 2027. According to ABI Research market forecasts, external Autonomous Vehicle (AV) sensor shipments will increase from 193 million in 2025 to nearly 738 million in 2035.
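As a quick sanity check on the forecast arithmetic, the sketch below computes the growth rate implied by the two shipment endpoints quoted above. The function names are illustrative and not taken from the ABI Research forecast. Note also that the 15% CAGR is quoted for camera sensors, while the endpoints are quoted for external AV sensor shipments, so the two figures may not cover exactly the same scope.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.15 means 15%)."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `rate` annually for `years` years."""
    return start_value * (1 + rate) ** years


# Shipment endpoints quoted in the article (millions of units).
SHIPMENTS_2025 = 193
SHIPMENTS_2035 = 738

implied_rate = cagr(SHIPMENTS_2025, SHIPMENTS_2035, years=10)
print(f"Implied CAGR 2025-2035: {implied_rate:.1%}")
print(f"193M compounded at 15% for 10 years: {project(193, 0.15, 10):.0f}M")
```

The endpoints imply a rate a little over 14% per year, close to the quoted 15%; the small gap could reflect rounding or the difference in scope noted above.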

Cameras have been widely adopted in active safety Advanced Driver-Assistance Systems (ADAS), with low-cost Complementary Metal-Oxide-Semiconductor (CMOS) image sensors a key factor in the democratization of ADAS. However, the modality has a number of well-known shortcomings that have typically been addressed through sensor fusion.

ABI Research Director James Hodgson says, “Alongside sensor fusion, the automotive industry is pushing for improved inherent capabilities in automotive imaging sensors, particularly with the advent of End-to-End (E2E) Artificial Intelligence (AI) approaches, and the need to supply the transformer with as much detail as possible.”

The two primary improvements required for AV imaging sensors over more basic active safety sensors are in resolution and dynamic range. In the longer term, there may be demand for a consolidated Driver Monitoring System (DMS)/Occupant Monitoring System (OMS) that leverages a single sensor.

Table 1: Autonomous Vehicle Imaging Sensor Evolution—Resolution and Dynamic Range Targets

| Parameter | Current (ADAS) Sensors | Target (Autonomous Driving) Sensors | Example Applications |
|---|---|---|---|
| Resolution | 1.3–1.7 MP | 8.0–12.0 MP | Audi A8 Traffic Jam Assist (1.7 MP), Mercedes-Benz DRIVE PILOT (8.0 MP) |
| Dynamic Range | 120–140 dB | 150–160 dB | High-contrast lighting (e.g., entering/exiting tunnels) |
| Sensor Type | CMOS | Advanced HDR CMOS/AI-enhanced imaging | Autonomous perception and safety validation |

Higher Resolution

The most important improvement required for autonomous driving sensors is increased image resolution. Conventional active safety image sensors have been selected to satisfy safety mandates and European New Car Assessment Programme (Euro NCAP) assessments at the lowest possible price. As a result, most early driving automation systems, such as the 2017 Audi A8 Traffic Jam Assist system and the early Level 2+ Ford BlueCruise system, use sensors in the 1.3 to 1.7 MP range.

Imaging sensor resolutions need to be upgraded for full self-driving applications.

Automakers are increasingly selecting vision cameras with resolutions of around 8 MP. Notable early examples include the Mercedes-Benz DRIVE PILOT (8.0 MP), BMW Highway Assist (8.3 MP), and Mercedes-Benz Intelligent Drive (8.0 MP) systems.

These higher resolutions provide the clarity and range to accurately classify objects within the environment.
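To make the link between resolution and detection range concrete, here is a minimal sketch using an idealized pinhole camera model. Everything numeric in it is an assumption for illustration, not a figure from the article or from any specific production camera: the horizontal pixel counts (1600 for a roughly 1.7 MP sensor, 3840 for a roughly 8.3 MP sensor), the 50-degree horizontal field of view, the 1.8 m target width, and the 20-pixel classification threshold.

```python
import math


def focal_length_px(h_pixels: int, hfov_deg: float) -> float:
    """Focal length in pixel units for an ideal pinhole camera."""
    return h_pixels / (2 * math.tan(math.radians(hfov_deg) / 2))


def pixels_on_target(h_pixels: int, hfov_deg: float,
                     target_width_m: float, distance_m: float) -> float:
    """Horizontal pixels covered by a target of given width at a distance."""
    return focal_length_px(h_pixels, hfov_deg) * target_width_m / distance_m


def max_range_m(h_pixels: int, hfov_deg: float,
                target_width_m: float, min_pixels: float) -> float:
    """Farthest distance at which the target still spans `min_pixels`."""
    return focal_length_px(h_pixels, hfov_deg) * target_width_m / min_pixels


# Hypothetical sensor widths chosen only to match the megapixel classes.
ASSUMED_SENSORS = {"~1.7 MP": 1600, "~8.3 MP": 3840}
HFOV_DEG = 50.0        # assumed horizontal field of view
CAR_WIDTH_M = 1.8      # assumed target width
MIN_PIXELS = 20        # assumed threshold for reliable classification

for label, width_px in ASSUMED_SENSORS.items():
    px = pixels_on_target(width_px, HFOV_DEG, CAR_WIDTH_M, 100.0)
    rng = max_range_m(width_px, HFOV_DEG, CAR_WIDTH_M, MIN_PIXELS)
    print(f"{label}: {px:.0f} px on a car at 100 m, "
          f"{MIN_PIXELS} px threshold reached at {rng:.0f} m")
```

Under these assumptions, pixels on target scale linearly with horizontal pixel count at a fixed field of view, so the higher-resolution sensor either sees the same object from farther away or covers a wider field of view at the same range.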

On the downside, the typical location of the forward-facing camera module behind the windshield makes higher resolutions difficult to achieve because of the temperature challenges at that location. Nevertheless, 12-MP cameras could play a role in unsupervised and robotaxi applications in the longer term, where the emphasis on avoiding any high-profile accidents incentivizes an overkill approach to technology specification.

Higher Dynamic Range

Another key improvement that AV applications will require is greater dynamic range. Vehicles often encounter strong contrast between light and dark, which makes object detection more challenging. For example, an AV entering a tunnel must be able to identify a stationary vehicle that’s already in the tunnel, which is only possible if the image sensor has High Dynamic Range (HDR). Currently, capturing HDR images is a key limitation for intelligent driving.

The typical ADAS sensor, with a dynamic range of between 120 dB and 140 dB, struggles to identify objects in these high-contrast scenes, posing significant safety risks. Through interviews with automotive industry stakeholders, ABI Research has learned that imaging sensors are expected to achieve 150 dB to 160 dB to support safe vehicle maneuvering in scenarios with drastic differences in ambient lighting.
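For a sense of scale, the decibel figures above can be converted into contrast ratios. The sketch below assumes the convention commonly used for image sensors, where dynamic range in dB is 20·log10 of the ratio between the brightest and darkest resolvable signal; the function names are illustrative.

```python
import math


def db_to_contrast_ratio(db: float) -> float:
    """Brightest-to-darkest signal ratio for a dynamic range given in dB."""
    return 10 ** (db / 20)


def contrast_ratio_to_db(ratio: float) -> float:
    """Dynamic range in dB for a given brightest-to-darkest ratio."""
    return 20 * math.log10(ratio)


def db_to_stops(db: float) -> float:
    """Dynamic range in photographic stops (doublings of light)."""
    return math.log2(db_to_contrast_ratio(db))


# Ranges quoted in the article: current ADAS sensors and AV targets.
for db in (120, 140, 150, 160):
    ratio = db_to_contrast_ratio(db)
    print(f"{db} dB -> {ratio:,.0f}:1 ({db_to_stops(db):.1f} stops)")
```

Because the scale is logarithmic, each additional 20 dB is a tenfold increase in the contrast a sensor can capture in a single scene, so moving from 140 dB to 160 dB is a tenfold step rather than an incremental one.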

Consolidated DMS/OMS

The Driver Monitoring System (DMS) and Occupant Monitoring System (OMS) are responsible for detecting driver gaze, distractions, seatbelt status, and other vehicle safety information. Due to regulations like the General Safety Regulation 2 (GSR2) in Europe, the DMS offers the larger market opportunity for the foreseeable future. Moreover, sensor engineering for a DMS is simpler than for an OMS.

For an OMS, image sensors need to be capable of viewing the entire cabin, with a wide Field of View (FOV) being essential. In many cases, a Three-Dimensional (3D) Time of Flight (ToF) camera is used, with a resolution typically greater than 2.5 MP.

Recent technological advancements have made it possible for a single camera to perform both DMS and OMS functions. However, this is a very challenging engineering endeavor, so the approach remains nascent. The consolidation of DMS and OMS is a longer-term opportunity, with most automakers still opting for two separate imaging sensors.

Get the Report

For a more detailed analysis of autonomous driving sensor improvements needed for the wider adoption of AVs, download ABI Research’s report, Autonomous Vehicle Sensors: Image Sensor Requirements in Autonomous Vehicles.

Companies Mentioned: OMNIVISION, onsemi, Sony, Samsung, Mercedes-Benz, BMW, Audi, Ford

Frequently Asked Questions

1. Why are advanced sensors important for autonomous vehicles?

Advanced sensors are critical for Autonomous Vehicles (AVs) because they help the car perceive its surroundings accurately. High-resolution cameras, radar, and Light Detection and Ranging (LiDAR) systems enable better object detection, distance measurement, and decision-making, improving safety and reliability on the road.

2. How are camera technologies evolving for self-driving cars?

Camera technologies are advancing with higher resolutions, wider dynamic ranges, and improved low-light performance. New designs integrate multiple cameras and monitoring systems to deliver richer, more detailed environmental data, which is essential for safe autonomous driving.

3. What challenges must be overcome for fully autonomous driving?

Key challenges include ensuring sensor accuracy in all weather and lighting conditions, reducing hardware costs, improving real-time data processing, and achieving full system integration between sensors, software, and vehicle controls.

4. What improvements are needed for widespread adoption of autonomous vehicles?

To reach mass adoption, autonomous vehicles need smarter sensor fusion, more powerful AI processing, better calibration between cameras and other sensors, and rigorous safety validation. These advances will help automakers deliver safer, more dependable self-driving systems.

Related Research

Report | 2Q 2025 | AN-5925
