By James Hodgson | 1Q 2021 | IN-6072

Rich Autonomous Functions under Driver Supervision |

NEWS |

The SAE Level 2+ (L2+) trend continues to gain traction in the automotive market. Systems developed by Tesla, Mobileye, and NVIDIA are set to deliver a comprehensive set of autonomous driving functions, including urban driving, highway automation, lane changes, and merges. These are features more often associated with a fully autonomous vehicle or robotaxi. The critical difference between these L2+ vehicles and the typical Level 3 or Level 4 vehicle is the role that the driver plays in providing redundancy to the L2+ system. The driver retains responsibility for the vehicle, allowing automakers to begin deploying and monetizing their autonomous vehicle investments without exposing themselves to liability or waiting for a step change in any laws that may preclude deployment of unsupervised autonomous functions in the targeted region.

This raises an important question: what components are needed to deliver a vehicle with rich autonomous functions, but with redundancy provided by the driver? Which of the technologies seen on the typical unsupervised L4 prototype will ultimately prove unnecessary in the context of supervised L2+ automation?

Winners and Losers |

IMPACT |

- AV SoCs: AV SoC vendors are clear winners of the L2+ trend. Systems such as Intel Mobileye’s EyeQ5 and NVIDIA’s Xavier have been selected by OEMs and Tier Ones to enable L2+ automation. Both of these systems have also been selected to underpin unsupervised autonomous systems further in the future. NVIDIA’s flagship Orin SoC will also initially support L2+ functionality on Mercedes-Benz models, with headroom for unsupervised functionality to be enabled Over-the-Air (OTA) at a later date. Therefore, L2+ systems that are as feature-rich as their L4 counterparts will mandate reuse of the same processors, even if the driver provides the required redundancy.

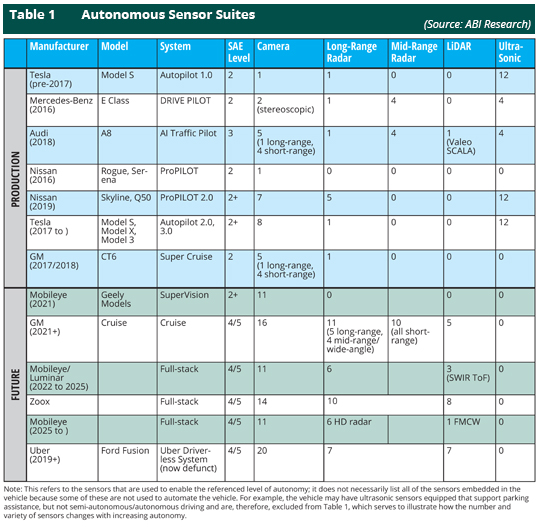

- Sensors: The wide range of autonomous functions delivered by L2+ vehicles will mandate 360-degree perception. While a conventional L2 system, such as the original Nissan ProPILOT system, may use only a single camera, L2+ systems from Tesla (8 cameras) and Mobileye (11 cameras) carry camera counts closer to those typically seen on L4/5 prototypes from the likes of GM’s Cruise (16 cameras) and Amazon’s Zoox (14 cameras). The 360-degree perception requirement will therefore drive an increase in the volume of sensors used (compared to legacy L2), but it will not necessarily drive an increase in the variety of sensors used. The biggest loser of the L2+ trend will be LiDAR. This tertiary sensor type has been developed to provide an extra level of redundancy to the radar-camera sensor fusion status quo, a role that the supervising driver fills in an L2+ system. This novel sensor modality also continues to command an ASP 10X higher than radar, further cementing L2+ as the automotive industry’s easy win.

- Mapping: While the driver plays an important role in providing redundancy, a second input will come in the form of a semantically rich digital map. A crowdsourced/user-generated map built from sensor data ingested from connected, sensor-equipped vehicles will provide a vital second opinion to the ego vehicle’s perception system. Tesla previously adopted this approach to improve the performance of its radar sensors.

Tesla compiled a digital map layer of radar responses to static street furniture, leveraging its fleet of radar-equipped vehicles to construct this map layer. Comparing the radar responses from the ego vehicle to these user-generated/crowdsourced maps allows for better filtering of false positive braking events and better discrimination between stationary vehicles and static overhanging street furniture. While L2+ systems may not provide market potential for HD, LiDAR-based maps, they will accelerate the use of crowdsourced sensor data to add redundancy to the perception stack.

- Software: The definition of L2 automation is broad: a combination of longitudinal and lateral assistance under driver supervision. Within this broad context, a host of autonomous functions are possible, from automatic lane changing at highway speeds to low-speed autonomous parking maneuvers. Therefore, there is considerable market potential for functional OTA updates that add new convenience features for drivers while leaving responsibility for the vehicle with them.

- Driver Monitoring Systems: A clear beneficiary of the L2+ trend will be DMS vendors. Any serious L2+ offering must have a robust means of ensuring that the driver is playing their role and providing the required redundancy. NVIDIA has developed a suite of driver monitoring capabilities as part of its DRIVE IX solution, while Mobileye has partnered with specialist DMS software providers such as Cipia.
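The map-based radar filtering described under Mapping can be illustrated with a minimal sketch. This is not Tesla's implementation, which is not public. The `RadarReturn` type, the shared map frame, the 1.5 m matching tolerance, and the function names are all hypothetical, chosen only to show the idea of discarding stationary radar returns that a crowdsourced map layer already explains.

```python
from dataclasses import dataclass
from math import hypot


@dataclass(frozen=True)
class RadarReturn:
    """Hypothetical radar detection in a shared map frame (assumed)."""
    x: float           # metres along the road in the map frame
    y: float           # metres across the road in the map frame
    stationary: bool   # True if the target has no ground speed


def is_known_static_furniture(ret, static_map, tolerance_m=1.5):
    """True if the return lies within tolerance of a mapped static reflector."""
    return any(hypot(ret.x - mx, ret.y - my) <= tolerance_m
               for mx, my in static_map)


def braking_candidates(returns, static_map, tolerance_m=1.5):
    """Keep stationary returns that the crowdsourced layer cannot explain.

    Moving targets are assumed to be handled by a separate tracker.
    """
    return [r for r in returns
            if r.stationary
            and not is_known_static_furniture(r, static_map, tolerance_m)]


# Illustrative data: one mapped overhead gantry, three live returns.
static_map = [(100.0, 5.0)]
returns = [
    RadarReturn(100.4, 5.2, True),   # matches the mapped gantry
    RadarReturn(60.0, 0.0, True),    # unmapped stationary object
    RadarReturn(30.0, 0.0, False),   # moving vehicle
]
candidates = braking_candidates(returns, static_map)
```

In this example only the unmapped stationary object at 60 m remains a braking candidate. The return near the gantry is suppressed because the map layer already records a static reflector at that location.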

Diverging Sensor Requirements |

RECOMMENDATIONS |

A critical feature of the L2+ trend will be the impact on the market potential for higher levels of automation. On the one hand, the scaling of key autonomous vehicle components such as SoCs and digital maps in L2+ contexts will accelerate the maturity of more highly automated vehicles. Conversely, the marginal returns and greater risk associated with L3 compared to L2+ will see the market split effectively between supervised feature-rich systems (L2+) and unsupervised feature-rich systems (L4/5). Meeting the redundancy requirement of unsupervised automation will be a significant challenge, requiring three-fold sensor redundancy in every field of view around the vehicle. Therefore, sensors that have been developed to support unsupervised, feature-poor systems (L3) are likely to find themselves squeezed out of the market. LiDAR vendors in particular are at risk, with only those systems able to meet the stringent redundancy requirements of L4/5 likely to succeed.
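The three-fold redundancy requirement can be expressed as a simple coverage check. The sketch below assumes that "three-fold" means three distinct sensor modalities per field of view, which is one reading of the text rather than a stated definition. The sector names and the sensor layout are hypothetical.

```python
REQUIRED_MODALITIES = 3  # assumed reading of "three-fold sensor redundancy"


def redundancy_gaps(coverage, required=REQUIRED_MODALITIES):
    """Return, per sector, how many distinct modalities are missing.

    `coverage` maps a field-of-view sector to the modalities observing it.
    Duplicate sensors of the same modality count only once.
    """
    gaps = {}
    for sector, modalities in coverage.items():
        distinct = len(set(modalities))
        if distinct < required:
            gaps[sector] = required - distinct
    return gaps


# Hypothetical sensor layout for illustration only.
coverage = {
    "front": ["camera", "radar", "lidar"],
    "rear": ["camera", "radar"],
    "left": ["camera", "camera"],
    "right": ["camera", "radar", "lidar"],
}
gaps = redundancy_gaps(coverage)
```

Under these assumptions the rear sector is one modality short and the left sector is two short, which is the kind of gap a third sensor type such as LiDAR would need to close in an unsupervised L4/5 system.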