Data Center Infrastructure Strategies Reshape as Agentic AI Demand Scales

By Paul Schell | 30 Jan 2026 | IN-8039

NEWS: Agentic AI Brings New Requirements for Data Center Infrastructure

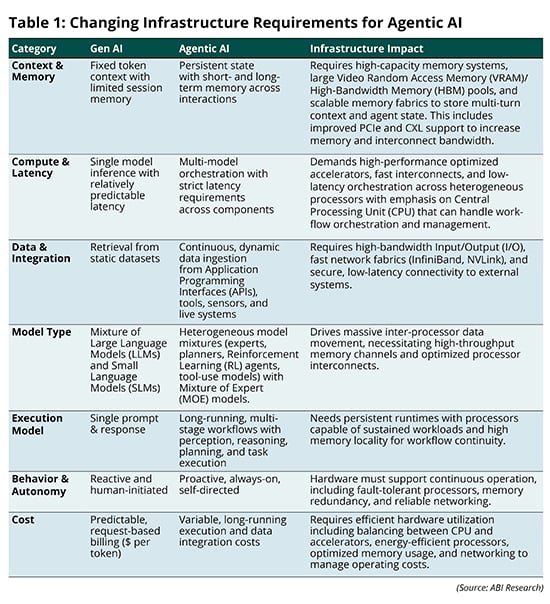

The transition from Generative Artificial Intelligence (Gen AI) to Agentic AI is accelerating, with enterprises beginning to deploy and, in some cases, scale Agentic AI use cases. This paradigm shift is set to significantly impact the AI infrastructure market, because the requirements of training and prompt-response Gen AI differ markedly from those of autonomous, always-on Agentic AI workflows. Table 1 outlines key architectural differences between Gen AI and Agentic AI and highlights their implications for infrastructure design and performance.

IMPACT: Customers and Vendors Quickly Pivot Strategies

These architectural shifts put pressure on processors, memory, networking, and system design, driving innovation and investment from both infrastructure vendors and their customers. The most critical shift centers on the growing importance of CPUs for Agentic AI. Agentic AI requires coordination across multi-step reasoning and workflows, which places significant emphasis on the CPU as a control layer that handles scheduling, data movement, and synchronization. Offloading these tasks from the Graphics Processing Unit (GPU) lets the GPU dedicate its resources to inference. The increasing emphasis on CPUs as the control layer is mirrored in the compute vendor announcements below:

Compute Vendors

- NVIDIA has moved quickly to align its data center platforms with the demands of Agentic AI. The Vera Rubin platform is optimized to accelerate agentic workflows through significant advances in CPU, memory, and interconnect capabilities. NVIDIA’s Arm-based CPUs deliver up to 6X performance improvements for agentic workloads over x86, while the newly introduced BlueField-4 DPU improves throughput and enhances inference performance. Beyond core infrastructure, NVIDIA continues to signal strategic intent through partnerships focused on memory and data access. Vast Data, running natively on BlueField-4 DPUs, enables NVIDIA’s inference context memory storage platform, allowing high-speed inference context sharing across nodes and supporting context localization for persistent, multi-turn reasoning across agents.

- AMD is also reframing its infrastructure strategy to better support Agentic AI with the Venice CPU. As part of the Helios AI Platform and paired with the MI455X GPU, Venice delivers up to 70% performance improvements over prior generations, driven by support for PCIe 6.0, increased DDR5 memory bandwidth, and expanded CXL lane capacity.

Compute Customers

- OpenAI is scaling its Agentic AI inference capabilities through a partnership with Cerebras, committing to up to 750 megawatts (MW) of compute capacity over the next 3 years. The initiative is intended to accelerate the expansion of inference capacity with more cost-effective solutions, while also diversifying OpenAI’s infrastructure strategy beyond NVIDIA-centric deployments.

- Hyperscalers such as AWS, Google Cloud, and Microsoft are increasingly deploying custom Application-Specific Integrated Circuits (ASICs) optimized for Agentic AI inference, shifting their mix away from NVIDIA GPUs. Microsoft, for example, has launched its second-generation AI inference chip, Maia 200, and has signaled that it will be used primarily for Agentic AI inference workloads, given its emphasis on High-Bandwidth Memory (HBM) optimized for Mixture-of-Experts (MoE) models.

RECOMMENDATIONS: Agentic AI Push Creates Opportunities for NVIDIA Challengers, but How Can They Capitalize?

NVIDIA has long been the gold standard in AI infrastructure, and its leadership is unlikely to be displaced overnight, especially given continued investment and innovation targeting Agentic AI inference. However, the industry’s transition from large-scale LLM training to always-on, Agentic AI inference introduces new constraints and requirements that present meaningful opportunities for challengers such as Intel, Cerebras, SambaNova, Qualcomm, Rebellions, and others.

To compete effectively, challengers must design systems specifically for agentic inference rather than repurposing the training-optimized hardware on which many have focused so far. Part of this requires rethinking the role the CPU plays within the AI system, especially given the need for workload orchestration and management.

Key areas of differentiation include CPUs, memory bandwidth and capacity, interconnect efficiency, inference-specific acceleration, and support for persistent context across agents. Crucially, innovation must be aligned with customer Key Performance Indicators (KPIs), where Total Cost of Ownership (TCO) is the dominant concern. This encompasses power efficiency, cooling density, system utilization, and workload-specific latency. Platforms that deliver consistent inference performance per dollar and can be scaled linearly will be best positioned to gain traction as inference volumes grow. ABI Research recommends that challengers focus on three core areas:

- Ecosystem and Partner Integration: NVIDIA’s market strength is reinforced by its broad and tightly integrated ecosystem spanning software libraries, system vendors, networking, and storage partners. For challengers, competing at the silicon level alone will be insufficient. Effective Agentic AI systems are increasingly constrained by memory access and data movement, making close collaboration across the stack essential. Partnerships with memory, storage, and data platform providers can enable deeper system-level optimization, improving context sharing, reducing latency, and lowering operational costs.

- Customer and Commercial Alignment: As hyperscalers continue to move inference workloads in-house using custom ASICs, the addressable market for challengers is shifting. Growth opportunities are increasingly concentrated among neocloud providers, AI leaders (e.g., Anthropic, Mistral, OpenAI), and enterprises seeking alternatives to GPU-centric deployments. To succeed, challengers must engage early and deeply with these customers to ensure that infrastructure roadmaps, deployment models, and commercial terms are aligned. Co-design efforts, predictable pricing, and flexible deployment options will be critical to winning share from incumbent platforms.

- Strategic Positioning and Differentiation: While NVIDIA’s dominance is reinforced by its installed base and ecosystem gravity, challengers can differentiate by focusing on workload-specific optimization, open standards, and simplified system architectures. Emphasizing openness, improvements in memory capabilities, or architecture designed explicitly for inference rather than training can resonate with customers seeking lower cost, reduced complexity, or vendor diversification. Novel approaches (e.g., wafer-scale inference engines, dataflow-optimized architectures, or memory-centric system designs) demonstrate that meaningful innovation remains possible outside the traditional GPU paradigm.

Written by Paul Schell