Agentic AI Becomes Increasingly Distributed as Edge AI’s Value Proposition Addresses Latency, Cost, Reliability, and Data Security Concerns

28 Oct 2025 | IN-7964

Vendors Across the Ecosystem Announce Products That Support Distributed Agentic AI

NEWS

Agentic Artificial Intelligence (AI) continues to mature. Vendors across the ecosystem are shifting toward productization, and enterprise adoption is beginning to accelerate. Standards such as the Model Context Protocol (MCP), along with ecosystem collaboration, are enabling the development of agentic systems that integrate multiple “agents” with applications and tools. This will eventually lead to a proliferation of multi-vendor, multi-agent systems made up of multiple intelligence engines, namely Small Language Models (SLMs), each tuned to a specific use case.

To date, Agentic AI has relied on cloud processing. However, most enterprises are highly dispersed, with data and applications spread across locations and regions and often siloed within specific infrastructure, so a cloud-only deployment is not the most effective approach. This necessitates a shift toward hybrid or distributed Agentic AI, and some vendors are starting to recognize the need to shift away from the cloud:

- Qualcomm is positioning itself as an enabler of “hybrid” Agentic AI with new Snapdragon releases (8 Elite Gen 5 for smartphones, X2 Elite for PCs). These chips combine Neural Processing Units (NPUs) with Central Processing Units (CPUs) and Graphics Processing Units (GPUs) for AI processing, and Qualcomm demoed them running Agentic AI locally, with connectivity to the cloud for more complex reasoning tasks.

- Red Hat launched Red Hat AI 3, a hybrid AI platform that supports agentic inference on any hardware, from the data center to the edge. It includes a unified Application Programming Interface (API) layer (Llama Stack) and MCP support to enable model interoperability and integration with external tools.

- Lenovo announced agentic capabilities across its AI-enabled workforce portfolio, emphasizing hardware enablement: its AI Personal Computer (PC) features a dedicated AI processor that runs small Foundation Models (FMs) locally, supporting “certain” agentic operations without network connectivity.

- Intel (a longstanding leader in hybrid AI) demoed hybrid Agentic AI browsing and PowerPoint generation at its Technology Tour in Arizona. The solution, enabled by Panther Lake, leverages both local and cloud capacity to improve latency and data privacy while reducing costs.

Even NVIDIA recognizes that centralized cloud deployments are not the only way to run inference at scale. Building on its existing edge computing solutions, it recently started shipping DGX Spark, which gives developers desktop fine-tuning and inferencing capacity for models of up to 200B parameters through a GB10 superchip with 128 Gigabytes (GB) of memory. There is some market interest in using DGX Spark for on-premises product use cases, but challenges such as heat dissipation remain. In addition, demand is increasing for the RTX Pro 6000, which is specialized for on-premises enterprise AI, as well as for NVIDIA’s AI PC portfolio with RTX cards. NVIDIA’s recent partnership with Intel, which announced that x86 Systems-on-Chip (SoCs) will integrate RTX GPU chiplets, is another example of the growing importance of distributed infrastructure.

Why Does Distributed Agentic AI Inference Make More Sense?

IMPACT

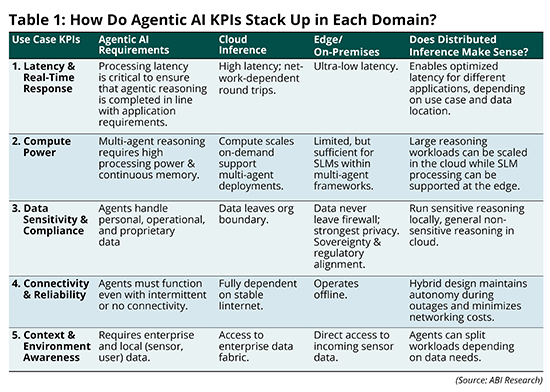

ABI Research expects that distributed Agentic AI frameworks will be necessary to support enterprise adoption. Table 1 highlights how distributed solutions can effectively align with enterprise Agentic AI Key Performance Indicators (KPIs):

Because most enterprises are highly distributed entities, it makes sense to build agentic systems that mirror this structure technically, as doing so optimizes latency, compute access, security, connectivity requirements, and data access. A distributed approach can also lead to more cost-effective operations:

- Cost: The cloud offers a scalable Platform-as-a-Service (PaaS) model, but costs become high at scale. Edge deployments, by contrast, incur a lower price per inference, with limited or no networking costs.

- Power Consumption: Efficient local processing reduces the total energy footprint, whereas large reasoning models rely on energy-intensive cloud resources that are less optimized for an individual organization’s specific system requirements.

- Lock-in: Cloud environments bring high ingress and egress fees, as well as a degree of dependency risk that distributed deployments can partially mitigate.

New Distributed Agentic AI Paradigm Brings Opportunities & Challenges for Market Stakeholders

RECOMMENDATIONS

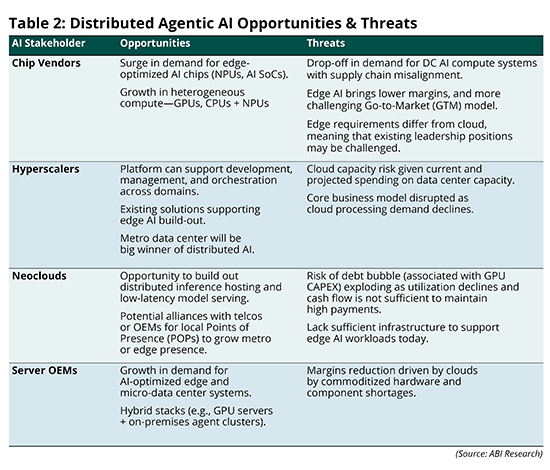

Shifting toward distributed Agentic AI will help enterprises scale effectively, overcome technical issues, and optimize commercial models. As enterprises increase spending on Agentic AI, growing demand for distributed deployments that leverage cloud, on-premises, and edge infrastructure will create significant challenges for the cloud-focused supply chain. Table 2 highlights the biggest threats and opportunities for stakeholders.

All vendors in the data center supply chain face risk from distributed Agentic AI, but none more so than neoclouds that have focused on building large training clusters and have taken on substantial debt to fund GPU build-outs. These clusters are mostly unsuitable for agentic inferencing; if the market moves quickly away from cloud inference, utilization rates will plummet, hitting cash flow and potentially bursting their mounting debt bubbles. Companies betting big on data center growth need to recognize the potential impact of distributed inference and develop effective medium- to long-term strategies to align with this likely market shift.
