Technology Evolution and Market Dynamics

NEWS

The Current Market Status and Memory Wall Ahead

Low-Power Double Data Rate 5 (LPDDR5) and LPDDR5X represent the current state of the art in mobile Dynamic Random Access Memory (DRAM), delivering theoretical bandwidths of up to 85.6 Gigabytes per Second (GB/s) and capacities between 16 Gigabytes (GB) and 64 GB. They are deployed across nearly all premium and flagship smartphones, including the iPhone 16 Pro, Samsung Galaxy S24 Ultra, Samsung Galaxy S25, Google Pixel 9 Pro, and other leading Android devices from Xiaomi, OnePlus, OPPO, vivo, HONOR, Sony, and Motorola. However, the next wave of Artificial Intelligence (AI) workloads will quickly saturate both memory capacity and bandwidth.
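The headline bandwidth figure can be reproduced with simple interface arithmetic when it is read as gigabytes per second. The sketch below is illustrative only: the 10,700 MT/s per-pin rate and the 64-bit bus width are assumptions typical of a flagship LPDDR5X configuration, not values stated in the text, and `peak_bandwidth_gb_s` is a hypothetical helper name.

```python
def peak_bandwidth_gb_s(transfers_mt_s: float, bus_width_bits: int) -> float:
    """Theoretical peak bandwidth in decimal gigabytes per second.

    transfers_mt_s: per-pin data rate in mega-transfers per second.
    bus_width_bits: total width of the memory interface in bits.
    """
    bits_per_second = transfers_mt_s * 1e6 * bus_width_bits
    return bits_per_second / 8 / 1e9  # bits -> bytes -> gigabytes


# Assumed LPDDR5X configuration: 10,700 MT/s per pin on a 64-bit bus.
lpddr5x = peak_bandwidth_gb_s(10_700, 64)
print(f"LPDDR5X theoretical peak: {lpddr5x:.1f} GB/s")
```

Because this is a theoretical peak, sustained throughput on a real device will be lower.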

For instance, a 7B parameter model requires roughly 3.5 GB to 14 GB for the model weights alone, depending on the quantization precision (4-bit, 8-bit, or 16-bit), which could strain the total memory capacity of a phone. In addition, inference running in the background consumes a significant amount of memory for runtime overheads (e.g., tensor activations, attention mechanisms, and intermediate computations). This means a single interaction with an on-device AI agent could consume a gigabyte or more of additional memory. The multimodality required for agentic systems will make matters worse: the autonomous operation of these systems may combine audio, image, and text processing simultaneously, creating contention across multiple demanding workloads.
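The weight footprint can be estimated from the parameter count and the bit width alone. The following is a back-of-the-envelope sketch, not a measurement: it ignores quantization metadata, mixed-precision layers, and all runtime overheads, and `weight_footprint_gb` is a hypothetical helper name.

```python
def weight_footprint_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate storage for model weights alone, in decimal gigabytes."""
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / 1e9


# A 7B-parameter model at the three precisions mentioned in the text.
for bits in (4, 8, 16):
    print(f"{bits:>2}-bit weights: {weight_footprint_gb(7e9, bits):.1f} GB")
```

Activations and other runtime buffers come on top of these figures, which is why the total can approach the full DRAM capacity of a phone.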

Memory bandwidth is another critical constraint, given the growing disparity between processor and memory performance. While the performance of the computing fabric with AI acceleration is improving at a pace of 30% to 50% annually, memory bandwidth and latency have improved by no more than 10% per year. This mismatch creates what the industry commonly calls the "memory wall": the processor fabric spends an increasing share of its time waiting for data to move in and out of memory. These inefficiencies could damage the overall AI experience on mobile devices and manifest themselves as device overheating and thermal throttling when memory bandwidth cannot keep up with compute intensity during the execution of AI workloads. Increased energy consumption is another concern, because for advanced AI workloads the energy cost of moving data between memory and the compute fabric could exceed the energy cost of the computation itself.
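To see how quickly these growth rates diverge, the gap can be compounded over several years. This is a minimal sketch under the simplifying assumption that both rates stay constant; since the text gives 10% as an upper limit for memory improvement, the result is a lower bound on the gap. The function name is hypothetical.

```python
def compute_to_memory_gap(compute_growth: float, memory_growth: float, years: int) -> float:
    """Ratio of compute capability to memory bandwidth after `years`,
    normalised to 1.0 today, assuming constant annual growth rates."""
    return ((1 + compute_growth) / (1 + memory_growth)) ** years


# Compute grows 30% to 50% per year; memory is assumed to grow 10% per year.
for years in (1, 3, 5):
    low = compute_to_memory_gap(0.30, 0.10, years)
    high = compute_to_memory_gap(0.50, 0.10, years)
    print(f"after {years} year(s): gap widens {low:.2f}x to {high:.2f}x")
```

Even at the low end of the compute range, the gap more than doubles within five years.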

To illustrate, consider image generation tasks using models like Stable Diffusion. These tasks typically require a sustained memory bandwidth of 4 to 6 GB/s. While this may appear well within LPDDR5X's capabilities, it represents a constant, heavy workload that must be maintained over time, unlike the short bursts of activity for which most memory systems are optimized. When such demanding tasks run continuously, they place significant stress on the memory subsystem, leading to overheating, reduced power efficiency, and throttled performance. This gap between theoretical and practical performance makes current memory systems a bottleneck for running Generative Artificial Intelligence (Gen AI) applications on mobile devices.

Similarly, running Large Language Models (LLMs) like Meta's Large Language Model Meta AI (LLaMA) 2 7B on a mobile device yields relatively modest output rates of no more than 20 tokens per second, even when optimized. The limit is not that the processor is too slow, but that the memory cannot deliver data fast enough to keep it busy. As the next wave of AI use cases will increasingly involve diffusion-based image generation, multimodal input, Agentic AI systems for task automation, on-device video generation, image-to-video generation, and real-time audio and content translation, memory bandwidth will become an even more critical factor in overall system performance.
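A rough ceiling on decode speed follows from dividing memory bandwidth by the bytes that must be read per token. The sketch below rests on several assumptions that are not from the text: each generated token streams all weights from DRAM once (batch size 1, no reuse), the LPDDR5X peak is about 85.6 gigabytes per second, the model is a 7B network quantized to 4 bits (about 3.5 GB), and the 70% sustained-efficiency factor is purely illustrative.

```python
def decode_tokens_per_second(bandwidth_gb_s: float,
                             weights_gb: float,
                             efficiency: float = 1.0) -> float:
    """Upper bound on tokens/s for a memory-bound decoder.

    Assumes every token requires one full pass over the weights in DRAM.
    `efficiency` scales the peak bandwidth down to a sustained figure.
    """
    if weights_gb <= 0:
        raise ValueError("weights_gb must be positive")
    return bandwidth_gb_s * efficiency / weights_gb


peak = decode_tokens_per_second(85.6, 3.5)            # theoretical ceiling
sustained = decode_tokens_per_second(85.6, 3.5, 0.7)  # assumed 70% efficiency
print(f"ceiling: {peak:.1f} tok/s, sustained estimate: {sustained:.1f} tok/s")
```

Under these assumptions the sustained estimate lands below 20 tokens per second, and no amount of extra compute raises it; only more bandwidth or a smaller model does.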

Next-Generation Memory Standards in Development

To address this growing bottleneck, the memory industry is moving in two directions: evolutionary and revolutionary.

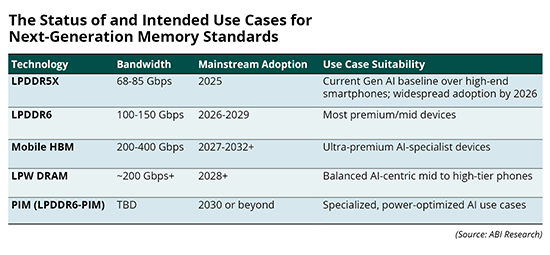

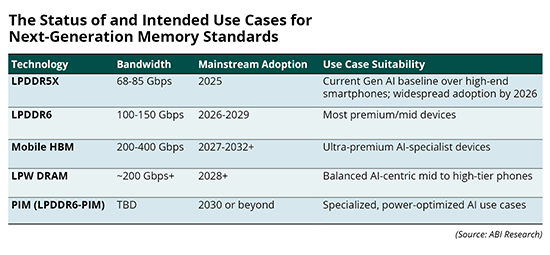

LPDDR6, standardized by the Joint Electron Device Engineering Council (JEDEC) in 2024, represents the evolutionary path. It will offer transfer speeds ranging from 8,800 to 17,600 Mega Transfers per Second (MT/s), or around 100 GB/s to 150 GB/s, which is 25% to 50% more than LPDDR5X, depending on implementation. Backed by the incumbent memory suppliers Samsung, SK Hynix, and Micron, LPDDR6 is designed for better burst performance, improved energy per bit transferred, and scalable integration with future Systems-on-Chip (SoCs). Engineering samples are expected by late 2025, with first commercial deployments in late 2026 at the earliest and broader flagship adoption by 2028.

The more radical approach is Mobile High Bandwidth Memory (HBM). Adapted from data center Graphics Processing Units (GPUs) and AI accelerators, Mobile HBM could offer bandwidth in the range of 400 GB/s and may reach over 800 GB/s, roughly 10X the bandwidth of LPDDR5X or more. This leap would enable continuous, large-context LLM inference, high-frame-rate multimodal generation, and persistent agentic processes to operate natively on-device. However, the challenges are significant: HBM consumes more power, requires complex Three-Dimensional (3D) packaging, has a greater die height, and costs 4X to 6X more than LPDDR, which makes the technology extremely difficult to implement in traditional smartphones.

Despite these challenges, device makers and memory vendors appear to be progressing aggressively. For instance, Apple is rumored to be planning to adopt Mobile HBM for its 2027 iPhone model, which may coincide with the 20th anniversary of the iPhone brand. It is also rumored that SK Hynix has already begun developing mobile HBM stacks for mobile SoCs using its Vertical Fan Out (VFO) technology. Samsung may be leveraging its Vertical Cu post-Stack (VCS) technology to port HBM to mobile implementations, while Micron is pursuing a differentiated approach targeting thermally optimized high-bandwidth layers. Huawei is rumored to be the furthest along in mobile HBM development, with an implementation roadmap targeting 2027 deployments via partnerships with JCET, Semiconductor Manufacturing International Corporation (SMIC), and Wuhan Xinxin Semiconductor Manufacturing Co., Ltd. (XMC).

In parallel, hybrid and emerging architectures are also gaining attention. Low-Power Wide DRAM (LPW DRAM) is under development at Samsung, reportedly in collaboration with Apple. It offers a middle ground between LPDDR and HBM, targeting approximately 200 GB/s of bandwidth with simplified stacking and improved thermal characteristics. At the International Solid-State Circuits Conference (ISSCC) 2025, Samsung's Chief Technology Officer (CTO) confirmed that the first mobile products featuring LPW DRAM are expected to launch in 2028, optimized for mobile on-device AI workloads. Processing-in-Memory (PIM) is also re-emerging as a long-term solution to reduce data movement, potentially integrating limited compute directly into DRAM modules. PIM architectures are unlikely to appear commercially at scale before 2030, but are being seriously explored as AI workloads become increasingly memory-centric.

Market Drivers and Industry Impact

IMPACT

The primary driver of this memory transformation is the dramatic scaling of on-device AI. ABI Research projects that, by 2030, over 95% of smartphones will support on-device Gen AI features, including LLM-powered assistants, real-time translation, summarization, image or video generation, and, most importantly, Agentic AI systems. These features are not just computationally demanding; they are memory-bound in both bandwidth and latency.

This evolution is creating new tiers of product differentiation. Entry-level AI smartphones may continue to rely on LPDDR5X with limited Gen AI capabilities, while mid-to-premium segments will move toward LPDDR6. A new ultra-premium category, which some refer to as “AI workstation phones,” will emerge, targeting creators, developers, enthusiasts, and enterprise use cases. These devices could feature dual-tier memory architectures, with mobile HBM for high-speed AI tasks and LPDDR6 for general-purpose functions.

Chipmakers are already reacting to this shift. Qualcomm is expected to integrate optimized memory controllers for LPDDR6 and improved bandwidth routing for its forthcoming Snapdragon 8 Elite Gen 2, likely to be announced at the Snapdragon Summit in September 2025. MediaTek and Samsung's Exynos platforms are also investing in memory co-design to align with Gen AI workloads. These changes are increasingly shaping SoC roadmaps, moving memory bandwidth from a cost consideration to a strategic design factor.

Geopolitical dynamics are also influencing market strategies. The memory supply chain is heavily concentrated in South Korea, Taiwan, and, to a certain extent, Japan, raising concerns over supply resilience and export control policies. China is responding by ramping up domestic memory production through ChangXin Memory Technologies Inc. (CXMT) and other national champions. Huawei is using its vertically integrated strategy to potentially leapfrog Western players in memory innovation, especially in mobile HBM. This could disrupt the competitive balance and accelerate segmentation in global supply chains.

Strategic Implications, Recommendations, and Conclusion

RECOMMENDATIONS

The emergence of Gen AI and agentic systems marks a once-in-a-decade inflection point in smartphone design, and memory is at the core of this disruption. The next 5 years will determine which technologies and players shape the competitive landscape for mobile AI.

For memory manufacturers, the imperative is twofold: accelerate LPDDR6’s readiness for mainstream integration by 2026, and aggressively pursue packaging and thermal innovation to make HBM viable within smartphone form factors. Strategic partnerships with SoC vendors will be key to aligning performance and power profiles.

Chipset developers must re-architect memory subsystems to minimize bandwidth bottlenecks. This includes implementing wider memory buses, improving scheduling algorithms, and eventually exploring support for hybrid DRAM-HBM systems. Early access to next-generation memory samples will be a competitive differentiator for both benchmark performance and user experience.

OEMs and smartphone brands need to rethink product tiering and supply strategies. Flagship and ultra-premium models must now consider memory as a defining feature. Dual sourcing of memory and stronger integration with platform providers will be necessary to manage both risk and innovation timelines. Companies that view memory as a commodity will fall behind as performance gaps widen in AI workloads.

In conclusion, the battle for AI performance leadership is shifting beyond raw accelerator throughput, measured in Tera Operations per Second (TOPS), and into the memory subsystem. Technologies like LPDDR6, mobile HBM, and LPW DRAM will enable the next generation of AI-native experiences, from multimodal agents to real-time generative content. The memory choices made in 2025 and 2026 will define the landscape of next-generation smartphones and new AI-native mobile device form factors for the next decade. Those aligning early and building strategic depth in memory architecture will emerge as leaders in the AI-powered mobile era.