NVIDIA Is Going Too Fast for the Telco Market

By Dimitris Mavrakis

02 Jun 2025

IN-7844

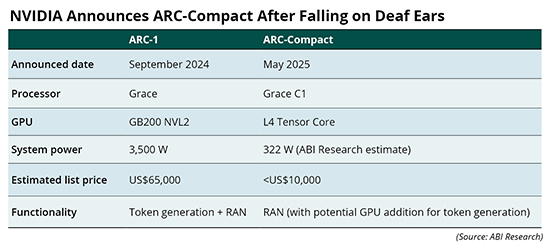

NVIDIA Announces ARC-Compact After Falling on Deaf Ears

NEWS

NVIDIA announced the Aerial RAN Computer (ARC)-Compact in May 2025, 9 months after having announced its much bigger ARC-1 server. The new server is a much smaller version of the ARC-1 and is positioned for Radio Access Network (RAN) workloads only, rather than both Generative Artificial Intelligence (Gen AI) and RAN processing concurrently. Table 1 compares the two servers directly.

According to ABI Research discussions and primary research with key decision makers for network deployments, the ARC-1 was considered an expensive and power-hungry appliance to deploy in the network, without a clear value proposition. Token generation at the cell site, at an aggregation point, or even at a core network location is not yet a proven business case, as Artificial Intelligence (AI) training and inference workloads remain centralized due to fierce competition between hyperscalers and neocloud providers. The few telcos that are active in Gen AI today have chosen to partner with neocloud providers rather than deploy their own Graphics Processing Units (GPUs), including Verizon with Vultr and SK Telecom with Lambda. Some telcos, including SoftBank, are deploying their own GPUs in-house, but these are exceptions.

It could be concluded that because the ARC-1 was deemed too expensive, power-hungry, and overpowered for the cellular network, NVIDIA announced the ARC-Compact to enable a more moderate deployment of GPUs throughout the cellular network. However, the ARC-Compact now faces a different problem.

No More "Build It and They Will Come"

IMPACT

Telcos are currently operating with a business model of single-digit profit margins and cannot invest significantly in building new features in their networks without a clear Return on Investment (ROI). The “build it and they will come” mentality has failed so many times that it has created an extreme aversion to capital risk. Significant buildouts are unlikely in the next few years, especially without a clear business and revenue opportunity. The original ARC-1 did introduce potential new opportunities through token generation at the edge, albeit with an unproven and theoretical business case. The ARC-Compact takes that new opportunity out of the discussion and simply aims to replace traditional baseband servers, most of which have already been deployed in the telco network.

According to ABI Research estimates, vendor data sheets, and NVIDIA announcements, the ARC-Compact consumes approximately 12.88 Watts per Gigabit per Second (W/Gbps), whereas a modern baseband server from a Tier One infrastructure vendor consumes 13.25 W/Gbps. It should be noted that the NVIDIA power consumption data are derived from lab tests, whereas the Tier One vendor data are from live networks. Moreover, the price points of the two servers are expected to be comparable, with both in the US$5,000 to US$10,000 range. But the question is, why should telcos refresh their cellular networks? For the promise of Gen AI at the edge in the future? Or to potentially save on energy costs, assuming the ARC-Compact can deliver what it promises?
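To put the two efficiency figures in perspective, the short Python sketch below computes the relative difference between them. The function names and the 10 Gbps throughput are illustrative assumptions that do not come from the source, and because the NVIDIA figure is from lab tests while the Tier One figure is from live networks, the result should be read as indicative only.

```python
# Efficiency figures quoted in the text, in Watts per Gbps.
# NVIDIA figure: lab tests. Tier One figure: live networks.
ARC_COMPACT_W_PER_GBPS = 12.88
TIER_ONE_W_PER_GBPS = 13.25


def relative_saving(candidate: float, baseline: float) -> float:
    """Fractional reduction in power per unit of throughput versus the baseline."""
    return (baseline - candidate) / baseline


def power_draw_watts(w_per_gbps: float, throughput_gbps: float) -> float:
    """Power draw at a given throughput, assuming efficiency stays constant."""
    return w_per_gbps * throughput_gbps


saving = relative_saving(ARC_COMPACT_W_PER_GBPS, TIER_ONE_W_PER_GBPS)
print(f"Relative saving: {saving:.1%}")

# Hypothetical throughput chosen only for illustration (not from the source).
EXAMPLE_THROUGHPUT_GBPS = 10.0
delta_watts = (
    power_draw_watts(TIER_ONE_W_PER_GBPS, EXAMPLE_THROUGHPUT_GBPS)
    - power_draw_watts(ARC_COMPACT_W_PER_GBPS, EXAMPLE_THROUGHPUT_GBPS)
)
print(f"Difference at {EXAMPLE_THROUGHPUT_GBPS:.0f} Gbps: {delta_watts:.1f} W")
```

On the quoted figures, the gap is under 3%, which helps explain why energy savings alone may be a weak argument for a network refresh.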

The ARC-1 and the ARC-Compact are part of the same vision and are not competing against each other. The ARC-1 will likely be deployed at a centralized, busy location to manage both AI and RAN workloads, whereas the latter would be positioned at the cell site for quieter areas. Nevertheless, neither the ARC-1 nor the ARC-Compact seems to be creating waves of enthusiasm in the industry, so NVIDIA must package these servers in a different manner and appeal to business decision makers.

NVIDIA Is Playing a Long Game, but It Needs Partners

RECOMMENDATIONS

Deploying an ARC-Compact instead of a Tier One vendor baseband shifts the lock-in from the latter’s proprietary custom silicon to CUDA, meaning that large infrastructure vendors will lose their unique value in the physical layer, where processing takes place in near-real time. This assumes NVIDIA’s Aerial RAN can match the performance and energy efficiency of custom silicon. There are benefits to this shift, namely the ability to use any GPU in NVIDIA’s lineup, as well as NIMs or other advanced NVIDIA software tools. This can allow telcos to break free from custom silicon completely, but it leaves them dependent on NVIDIA GPUs. After all, CUDA does not support third-party hardware.

NVIDIA’s development model in the Information Technology (IT) industry is continuous and relentless innovation without any competition. NVIDIA has been announcing new GPUs every year, with billion-dollar contracts for each of these GPUs as soon as they are announced. However, the telco development model is more time-consuming, as equipment needs to be vetted for carrier-grade operation and 99.999% uptime. NVIDIA cannot, on its own, convince telcos to shift their legacy deployment model to GPUs, especially in the current economic environment and at the current stage of 5G rollouts. However, NVIDIA’s GPU-based RAN has merits, especially for a future that includes 6G.
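As a rough illustration of how strict the 99.999% uptime requirement is, the sketch below converts an availability percentage into the downtime it allows per year. The function name is hypothetical, and a 365-day year is assumed.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes, assuming a non-leap year


def allowed_downtime_minutes(availability_percent: float) -> float:
    """Maximum downtime per year (in minutes) at the given availability."""
    return (1 - availability_percent / 100) * MINUTES_PER_YEAR


print(f"{allowed_downtime_minutes(99.999):.2f} minutes per year")
```

At 99.999%, the budget works out to roughly five minutes of downtime per year, which is why carrier-grade vetting takes far longer than a yearly GPU release cadence.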

NVIDIA must partner with large infrastructure vendors, namely Ericsson, Nokia, and Samsung, and encourage these vendors to port their Layer-1 (physical layer) software to CUDA, even at a measured pace to start with. Only when large vendors have an interest in playing a role in this transition will NVIDIA’s value proposition become a serious contender. NVIDIA must also provide tangible new business models and blueprints for telcos to utilize, rather than hope that telcos will operate on a “build it and they will come” basis.

Written by Dimitris Mavrakis
