Hyperscalers Face a New AI Hardware Dilemma: Deploy Off-the-Shelf AI Infrastructure or Invest in Custom Solutions

By Reece Hayden | 06 Jan 2025 | IN-7667

Marvell's Custom AI Strategy Is Starting to Pay Off

NEWS

NVIDIA has dominated the boom in AI data center capacity over the last few years, with revenues increasing more than 4x. This juggernaut has built its market leadership through ‘off-the-shelf’ AI systems that combine leading compute and networking with the ability to ‘optimize’ solutions through CUDA-X libraries. But internal and external pressures on the data center market, especially among hyperscalers, mean that some are questioning whether ‘off-the-shelf’ products will be sufficient:

- Increasingly homogeneous solutions: with nearly all AI data center vendors deploying NVIDIA hardware, competitors are struggling to differentiate their offerings on value. Instead, the market is moving toward a price war, in which competitors try to differentiate by undercutting one another on cost.

- Local power constraints: every data center relies on the local power grid. As hyperscale data centers grow, governments will increasingly resist providing power capacity comparable to that of a small city. This will constrain where data centers can be built and how large they can be.

- Rapidly expanding operational costs: power efficiency and resource utilization remain poor in data centers because systems are not optimized for specific workloads. This is creating cost-efficiency problems within hyperscale facilities, which could spiral out of control as AI data center capacity continues to grow rapidly.

Solving these challenges may require vendors to go deeper and provide customized AI solutions for the data center market. Marvell certainly thinks so and has built its data center strategy around customization. Targeting the hyperscale market, it has developed custom compute (XPU, CPU, DPU), switching, and interconnect products that support both scale-up and scale-out data center architectures. It is also extending customization to high-bandwidth memory (HBM) with Micron, Samsung, and SK Hynix.

This approach has enabled Marvell to triple its data center business, from $0.5bn to $1.5bn, in one year. As a result, 71% of its revenue in the first three quarters of FY25 came from the data center segment. Partnerships with AWS on accelerated infrastructure and with Meta on custom NICs for the Open Compute Project have been key drivers of this growth. Marvell does not see this market slowing down, with ambitious revenue projections of more than $2.5bn for FY26. To support this expansion, it is directing 80% of its R&D budget to this market.

New Debate Between Custom and "Off-the-Shelf" Hardware

IMPACT

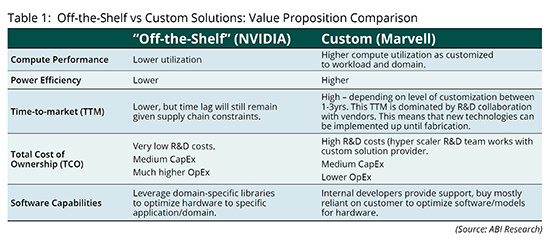

Marvell claims that it is not directly competing with NVIDIA but is instead working with NVIDIA to expand the data center TAM. But from the hyperscale perspective, it is important to consider the debate between custom and “off-the-shelf” hardware and to understand the role each will play in the market. The table below explores the relative value of these different solutions.

Custom solutions offer greater efficiency, which will be highly prized in a capacity- and power-constrained market. However, the scale of infrastructure demand from hyperscalers like AWS, Azure, and Google means that custom solutions will not always be suitable or even possible. As a result, NVIDIA will remain dominant in terms of overall market share. But the appeal of custom solutions means that Marvell’s position in the market will continue to expand, as it predicts. External constraints (e.g., the local power grid) will drive demand for more efficient, customized solutions. Meanwhile, the need to diversify away from single-source supply and the growing need to differentiate from competitors will push NVIDIA’s hyperscaler customers to look for alternative solutions.

Marvell Will Face Challenges, but ABI Research Believes That It Has Put Its "Chips" in the Right Market

RECOMMENDATIONS

Given the size of the AI data center total addressable market (TAM), Marvell is well positioned to continue growing its business. That is not to say it will not face challenges. Some will be shared by competitors (supply chain fragility, and slowing demand for training as vendors transition to inference), but others are specific to its custom approach to the market:

- Customer constraints: the volume needed to produce custom AI systems at a reasonable cost per unit, and to secure foundry capacity in high-end TSMC fabs, means that Marvell can only target ‘hyperscale’ customers with sufficient volume demand. Although the customer base is constrained, the number of hyperscalers is certainly not fixed, with companies like OpenAI potentially ramping up AI infrastructure CapEx to join the ‘hyperscale’ club.

- Time-to-market: AI data center capacity continues to grow rapidly, and hyperscalers want to move quickly to satisfy demand for training and inference. However, building custom AI solutions takes one to three years, depending on the level of customization. Shipments of NVIDIA hardware do take time, but the lag is far less noticeable.

- Operational scale: building custom hardware consumes time and talent, as Marvell’s custom solutions team needs to work closely with each partner-customer over a one-to-three-year time frame. This limits the number of customers that Marvell can support and manage effectively. Scaling custom capacity will not be easy, as it requires hiring expensive new engineers.

- Lack of software capabilities: NVIDIA enables domain-specific optimization through its CUDA-X libraries, whilst Marvell provides very limited software-level support for its clients.

- Reliance on customers for deployment: unlike off-the-shelf vendors such as NVIDIA, Marvell does not have an ecosystem of OEM partners that provide the ‘server’ and act as a channel to market. This means that Marvell relies on its own talent and on the customer to build the specialized AI systems before they are deployed in the data center.

Some of these challenges are structural and will always pose problems given Marvell’s custom strategy. Where Marvell should focus, though, is software. NVIDIA has shown substantial performance improvements on domain-specific workloads by using CUDA-X libraries to optimize hardware for each application. This should be Marvell’s next focus: providing software optimization capabilities to further enhance the performance of its custom AI systems.

Written by Reece Hayden
